Challenge 011 | The Fellowship of Test Automation

- Miguel Verweij

- Nov 1, 2022

- 9 min read

Updated: Jan 28, 2023

You are probably thinking testing is boring and you might even be considering skipping this challenge. I have to admit that it does sound boring, and manual testing might even be so. So that's why we will learn how to automate it. The process itself is fun and you will make sure that every feature created earlier is still functional with a new release. Am I saying fun and better quality? YES INDEED! Now, are you interested?

Challenge Objectives

🎯 Learn about Power Apps Test Studio

🎯 Create test cases

🎯 Run cases and suites

🎯 Learn how to use Trace and Assert for failed tests

🤓 What Is Test Automation?

If you are regularly visiting this site, you are probably creating Canvas Apps on a regular basis. You might be making apps for yourself and/or for your team, or you are creating an app for the whole company. Either way, you will probably release a version, and after using it, you might want to make adjustments due to new requirements.

It is great to add new functionality. At the same time, we must make sure that the previously developed functionality is still working. You might be testing this manually, or maybe not at all. The latter is obviously risky, and manual work can become cumbersome when you want to adopt a more agile approach.

That is exactly where Test Automation comes into play. In a nutshell, it is scripting the app interaction to test the functionality. For Canvas Apps the Test Studio has been developed for this purpose. Ideally, we want to automate every single requirement of the app, but this might not be possible in every situation. Furthermore, just relying on automation is not recommended. It is a helpful addition to your testing process. Microsoft suggests that the following tests are suited for automation:

Repetitive tasks

High business impact functionality tests

Features that are stable and not undergoing significant change

Features that require multiple data sets

Manual testing that takes significant time and effort

Within Power Apps Test Studio, each test is called a case, and cases can be bundled into suites.

First Things First

Canvas template app

Before we start testing, we will need a Canvas App. Because we are focusing on test automation, we will not create one from scratch, but install a template app. This challenge guide is using the Help Desk template app, but feel free to select another template, install a template from PnP Samples, or use an app of your own.

Go to make.powerapps.com

Create a new app

Under Start from template, select Help Desk

When the app loads, make sure that all the connections are created and allow them

Open the Settings and make sure that Formula-level error management is turned on

Save the app

Now browse through the app. This app is an example of how you can enable employees to create tickets. If you select login as a Help Desk User, you will see that option.

Power Apps Test Studio

To start creating some tests, open Advanced tools in the left ribbon and select Open tests.

The Test Studio will now be launched. As mentioned earlier, tests are organized as cases within suites, and you can see a Suite with a single Case in it. Within a case, you specify each app interaction step. You can do that manually, but you can also record your interaction. This is similar to the recorder you might know from Power Automate Desktop.

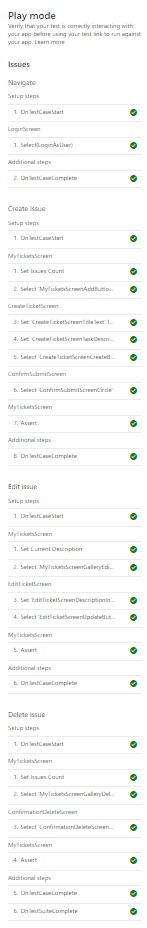

For this challenge, we will focus just on the ticketing functionality. That means creating an issue, editing an issue, and deleting an issue. You can see in the image below that those three cases have been created and the Suite has been renamed to Issues. This is a best practice to group your app functionality. You can add cases to a suite by selecting the Suite and pressing the New case button on the ribbon.

We will start with creating a new issue.

Press Record in the top ribbon

Press Login as a User

Press the Add button on the screen

Enter a Title (e.g. Challenge 011)

Enter a Description (e.g. new test issue)

Select Create

Select the confirm icon

Press Done

On the left panel, you see every app interaction is recorded. This is exactly what we are after. You will see all the steps are now listed in your case. Many items can be recorded but note that there are limitations to what is currently supported. The list should look like the image below.

The tests are saved to your app. If you want to play this test, the app must be published first, just like you need to publish your app before new functionality reaches your users. Go ahead and play the test. You will see that a new issue is automatically added to your issues list.

To be clear, the app uses local collections, so when you restart the app, the collections are empty again. However, if you are using a different data source a new record is added to that data source. This is something to be aware of during testing.

Another thing I want you to be aware of is that you just ran a single case. You can also run the whole suite. That will start all single cases in sequence. For that reason, it is best practice to have the first step within a case as the actual first step of that case. That might sound obvious, but what we just recorded is also the action to log in as a user. This is more a navigation prerequisite for the actual case, rather than a step of that case.

If all your cases require the same steps as a prerequisite, the Test Framework has the OnTestCaseStart property. We will test this by moving the first step to that property.

Copy the action expression of the first step

Press Test on the left panel

Paste the action expression in the OnTestCaseStart property

Delete the first step
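To give an idea of what ends up in the property after these steps: a recorded button press is stored as a Select expression. The line below is only a sketch. LoginAsUserButton is a hypothetical control name, so use whatever name your recording shows for the Login as a User button.

```powerfx
// OnTestCaseStart: runs before every case in the suite
// LoginAsUserButton is a hypothetical control name; copy the real one from your recorded step
Select(LoginAsUserButton)
```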

You can publish the app again and run the test once more to see how it works. The result should be the same, but now the navigation step lives in OnTestCaseStart. However, our cases don't each need that step; it only needs to run once. That's why I created a navigation step that contains that single action, and I removed it from OnTestCaseStart. You should do that too if you want to save yourself some errors later (yes, I had this issue myself). Because this is functionality you should be aware of, I kept it in. You have to copy and paste it twice now, but you will survive.

Up until now, the test case is checking if the controls are functioning. We haven't checked yet if there is a record added to the data source. This is a step that must be added manually. If you press the vertical ellipsis, you can manually add a step. In the list you will see the options Assert, SetProperty, Select, and Trace. In the list that is generated from the recording you see Set (SetProperty) and Select, so you know what those steps are doing. The Assert and Trace are new.
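As a rough illustration of the two recorded step types, the expressions in your case will look something like the sketch below. TitleInput, DescriptionInput, and CreateButton are hypothetical control names; the Help Desk template uses its own names, which you will see in your recording.

```powerfx
// Recorded "Set" step: SetProperty writes a value to a control property
// (TitleInput and DescriptionInput are hypothetical control names)
SetProperty(TitleInput.Text, "Challenge 011");
SetProperty(DescriptionInput.Text, "new test issue");

// Recorded "Select" step: Select simulates pressing a control
// (CreateButton is a hypothetical control name)
Select(CreateButton)
```

In Test Studio each of these expressions lives in its own step; they are chained here only to keep the sketch in one block.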

Trace

Trace is an optional expression that can be used to provide additional information about your test results. Each trace step adds a record to the Traces table, which is part of the TestCaseResult record. Just like there is an OnTestCaseStart property that we discussed earlier, there is an OnTestCaseComplete property that runs at the end of each test case. This means that we can send information about the test result to any data source we like when a test fails.
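A trace step could be as small as the sketch below. The message texts are my own wording, and TicketList is the collection behind the Issues gallery in the Help Desk template, so adjust both if you use another app.

```powerfx
// Trace step: adds one record with this message to TestCaseResult.Traces
Trace("Create issue: form submitted, checking the issue count next");

// A trace can also include live values for context
Trace("Tickets in TicketList: " & CountRows(TicketList))
```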

We will not make the error handling operational within this challenge, as that would mean we would have to create a data source just for that reason. If you want to make it work, feel free to extend your challenge. We will discuss how to make it work though.

In the OnTestCaseComplete property, you could enter a function like the snippet below. That way you could send failed tests to a specific data source where all your failed tests will be stored. If you create a data source yourself and adjust the snippet accordingly, it will create a record with all the traces as one single line of text (the Concat function). For that reason, it is helpful to create traces so that when someone is inspecting the error messages, there is a bit of context information.

If(
    !TestCaseResult.Success,
    Patch(
        YOURDATASOURCE,
        Defaults(YOURDATASOURCE),
        {
            TRACESCOLUMN: Concat(
                TestCaseResult.Traces,
                Timestamp & ": " & Message & Char(13)
            )
        }
    )
)

Assert

Assert is an expression with a Boolean output. Assertions are used to validate the expected result. Both passed and failed assertions are added to the Traces table, as we discussed in the Trace section.

Because we want to make sure an issue has been added, this is the expression we will add manually to our test case. We will create a variable that holds the count of issues before the steps that create an issue are executed. That variable is the input for our last step, where we check if the current count is 1 more than at the beginning of the test case. That way we ensure an item has been created.

Press the vertical ellipsis of the first step

Select Insert step above

Rename the step to Set Issues Count

Open the app in Canvas Editor (edit mode) if you don't have it open still

Inspect the Items of the Issues gallery

Notice that it's a filtering of the TicketList

Copy the first snippet below. Notice it contains that same filtering

Paste it in the Action field within Test Studio

Press the vertical ellipsis of the last step

Hover on the Insert Step below arrow

Select Assert

Copy the second snippet below

Paste it in the action field of the Assert step in Test Studio

Set(issuesCountBeforeTest, CountRows(Filter(TicketList, Author = MyProfile.Mail)))

Assert((issuesCountBeforeTest + 1) = CountRows(Filter(TicketList, Author = MyProfile.Mail)), "No issue created")

The assertion expression is quite straightforward. The text in the assertion is the message that will be added to the Traces table.

That's about it for the first case. You can now play your test and see the result. The assertion should pass. If you are encountering some issues, try reloading the page and republishing the app. Having the app open in Canvas Editor and Test Studio at the same time might cause some errors.

You can also update the Assert expression and remove the + 1 from the expression. That should result in a failed assertion. You can see the message is shown for context.
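For reference, the deliberately failing variant would look like this. It is the same expression as in the create case, just without the + 1, so the counts can never match after an issue has been added.

```powerfx
// Fails on purpose: after creating an issue the current count is one higher than the stored count
Assert(
    issuesCountBeforeTest = CountRows(Filter(TicketList, Author = MyProfile.Mail)),
    "No issue created"
)
```

Don't forget to put the + 1 back afterwards.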

So. What do you think? Is this cool stuff or what? You now know everything you need to know to make all the cases work.

Delete issue

The Delete issue case should be a walk in the park by now. Remember that you can record the steps. When you start recording there is no issue to be deleted, so you will have to create one first. You can delete those creation steps right after you stop the recording. After that, you set a variable as the first step and use it in the last assertion step. The only differences between the create and delete assertions are the offset (+1 at create and -1 at delete) and the message.

As it is a challenge, you go ahead and make this case work. For testing, you would need the create issue to run first. You can do that by not just running the case, but the whole suite. The steps of the Delete issue should look something like the image below.
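If you get stuck, the two manual steps could look roughly like the sketch below. It assumes the same TicketList filter as in the create case, and the message text is my own choice.

```powerfx
// First step of the case: store the count before the delete steps run
Set(issuesCountBeforeTest, CountRows(Filter(TicketList, Author = MyProfile.Mail)));

// Last step of the case: the count should now be one lower
Assert(
    (issuesCountBeforeTest - 1) = CountRows(Filter(TicketList, Author = MyProfile.Mail)),
    "No issue deleted"
)
```

In Test Studio these are two separate steps (one at the top, one at the bottom of the case); they are shown together here only for readability.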

Update issue

The update issue is a bit different but shouldn't be too hard to accomplish by now. You can again just record the steps. My test is to see if the description has changed. I collect that description in a variable and use that in my assertion. As we are nearing the end of this challenge, I share the snippet that I used in my assertion. You should be able to reproduce it with this information. And yes, I know First Filter isn't great, but it's Sunday at the time of writing.
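The variable step at the start of the case could be something like the sketch below. It assumes the same filter on TicketList as before. The second line is an alternative using LookUp, which returns the first matching record directly and avoids the First Filter combination.

```powerfx
// First step of the case: remember the description before the update steps run
Set(descriptionBeforeTest, First(Filter(TicketList, Author = MyProfile.Mail)).Description);

// Alternative with the same result: LookUp returns the first record that matches the condition
Set(descriptionBeforeTest, LookUp(TicketList, Author = MyProfile.Mail).Description)
```

You only need one of the two lines in your step.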


Assert(descriptionBeforeTest <> First(Filter(TicketList, Author = MyProfile.Mail)).Description, "Issue not updated")

Suite run

Now that you have created all three cases, you can publish and run the whole suite. I hope you don't get any errors. You can see all the steps on the left (excuse me for this terrible layout).

You can even copy the test link and paste it into a browser to test the functionality. That way you won't have to open the app for testing. The documentation states this link can be used in DevOps pipelines, but below you will read why we will discuss that in a later stage.

Additional Information

If you are a frequent visitor, you might think about Challenge 003 where we automated the deployment of solutions over different environments. It would be amazing if we could implement these automated tests in our CI/CD pipelines.

Well, I should be honest. When I read this blog post, I knew the topic for this challenge was test automation. Test Engine is an evolution of Test Studio. The blog post promises us that soon it will be possible to reuse the tests we've created in Test Studio.

As Test Engine is a dev-oriented way of testing, I wanted to first introduce the more low-code approach to test automation. If you are over the moon about test automation and implementing it in your pipelines, you should go ahead and check out their GitHub repo.

However, I promise that when the Test Studio tests can be reused, I will create a separate challenge focusing on implementing it in your pipelines. That will be challenge 003 + challenge 011, so I think I have a topic for challenge 014... Let's hope it will be supported by then.

Key Takeaways

👉🏻 Test Studio makes testing your Canvas Apps a fun exercise

👉🏻 Test Automation will improve your app quality

👉🏻 You can use the Assert expression to evaluate functionality

👉🏻 In February 2023 we take it to the next level
